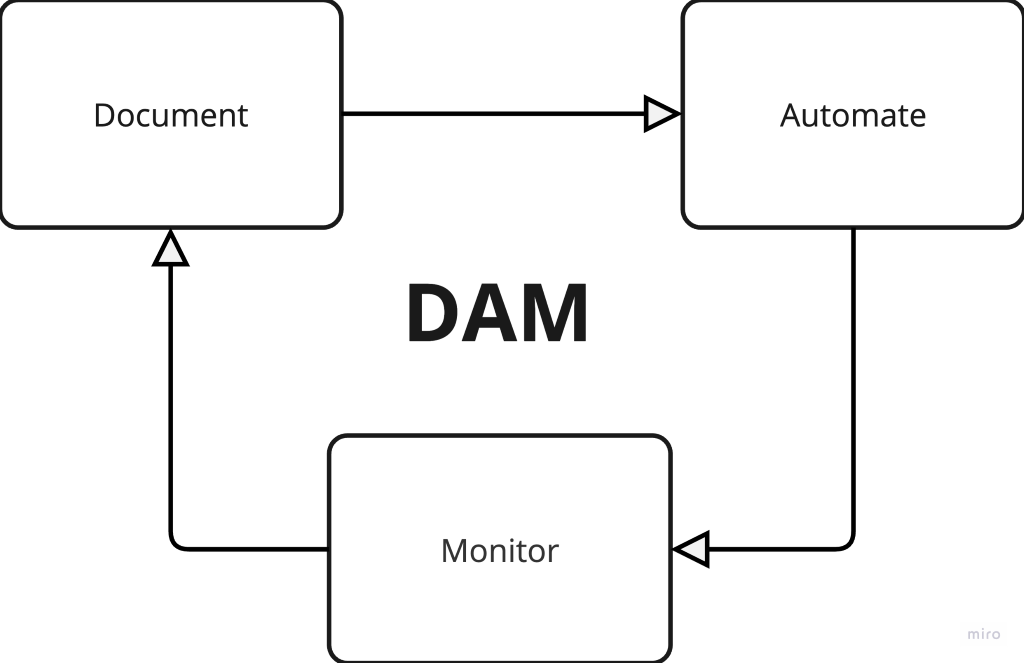

DAM Cycle - Document, Automate and Monitor

Although these ideas might seem basic, I am writing it from my experience working with local fintechs. One interesting thing across different these fintechs is how the different engineering cultures and processes shapes how fast and well product requirements are delivered. For small & midsized organisations, the symptoms of a lack of proper engineering processes usually include:

- Missed product delivery deadlines

- Non-satisfactory implementation of the business requirement,

- Engineers working overtime (inefficiently) without a corresponding increase in the business output

- New code changes causing unpredictable behaviour in older working systems

Sometimes organisations can diagnose these symptoms as evidence of a competence problem. Sometimes that’s actually the case but a lot of time the root cause is usually just an improper engineering process. The lack of these processes also makes it difficult to recognise where competence is lacking. I have reduced these processes into what I call the DAM cycle.

As long as you have fairly competent engineers, embedding the DAM processes could significantly increase the efficiency of your development process. If you think of your software project as a living ecosystem, documentation keeps knowledge flowing, automation removes time-consuming manual tasks, and monitoring ensures continuous health and improvement.

Document.

Application code is the absolute last thing you want to write. At the beginning of a project, it always seems like a waste of time to spend hours documenting. As you deliver the end product, this usually ends up being the difference between timely delivery of the correct implementation and a delivery that leaves stakeholder disappointed.

You shouldn’t expect correctness without clear specifications, and a specification that only lives in the engineer’s head is not worth of being called a specification.

Documenting basically

- Forces you to have clarity of thought before any coding starts,

- Makes it easier to provide a more accurate development timeline since you have thought deeper about the solution.

- Ensures the relevant stakeholders (business, audit and technical) are all aligned with regard to the implementation approach. Usually, stakeholder approval of documentation is required before development progresses. This avoids the blame game of the technology team not delivering according to business requirements. Debates and comments should happen at the documentation stage and not on the code or end product.

- Other solutions consuming your APIs get to know what your API does reducing ambiguity and human interactions.

See sample Technical Specification Document

Automation:

Manually scaling up servers, running deployments and updating production database via the GUI is a recipe for audit disaster, human errors, implementation delays and configuration hell.

Every deployment scripts, migrations, configurations, backfills, database seeding, etc. involved in your product should be versioned controlled, repeatable across environments, and reversible.

By repeatable, I should be able to run the exact same scripts on different environment (e.g development, staging, production) and get the desired outcome.

By reversible, I should be able to rollback the effect of the script on the application or infrastructure to the previous state easily.

Monitor (with alerts):

It goes without saying that you cannot improve what you don’t monitor. You wouldn’t even know which service needs improvement, has frequent downtimes, or has high latency leading to a bad user experience.

Amongst a few others, here are some standard checks you should have in place.

Consistency check:

These are simple cron scripts that just scans the database for inconsistencies and alerts you whenever there are inconsistencies. Examples of inconsistent data could be transactions in a pending state for too long, payment amounts that don’t match the request order amount, outward payment without a disbursement record etc. This ensures your application is self-validating and possibly self-healing.

Health checks:

To track uptime across the different services. Each service should expose a path (e.g./health) that does nothing but returns a response: of 200.

The health-check paths are regularly pinged and if for whatever reason a 200 is not returned for a service it alerts you that the service is down. You can also automate restarts in these scenerio.

Application Error logging and alert

Errors in your application should be proactively sent to you for resolution. Waiting for a customer support ticket to notify you of errors is another recipe for bad customer user experience and unnecessary escalations.

You need to be able to proactively resolve issues before the business or customer escalates.

API latency monitor

Unfortunately, application performance declines over time of being used. Endpoints become slow due to an increase in database records. Additional code changes add overhead. 3rd party services become less reliable at scale. You wouldn’t know which API endpoint is slowing down your entire application or needs optimisation without effective monitors in place. Use tools like Sentry Performance Insight to track this.

Bringing It All Together

Documentation feeds into Automation by clarifying how processes and pipelines should run. Automation reduces manual intervention, enabling developers to focus on higher-level problem-solving and keep documentation up to date more easily. Monitoring closes the feedback loop by informing updates to both your automated pipelines and your documentation. Data-driven insights ensure you’re automating the right tasks and capturing the right details for future reference.

Example Workflow:

A new feature is documented in your central wiki, with architecture diagrams explaining how it integrates with existing services.

Automated CI/CD pipelines are updated accordingly to run new tests and deployments. Monitoring dashboards to track feature usage and performance, alerting the team if latency spikes.

Based on insights from monitoring, you update the documentation to reflect performance constraints or best practices, continuing the cycle. If all the symptoms above still exist after embedding the DAM cycle, then maybe you have a competence issue